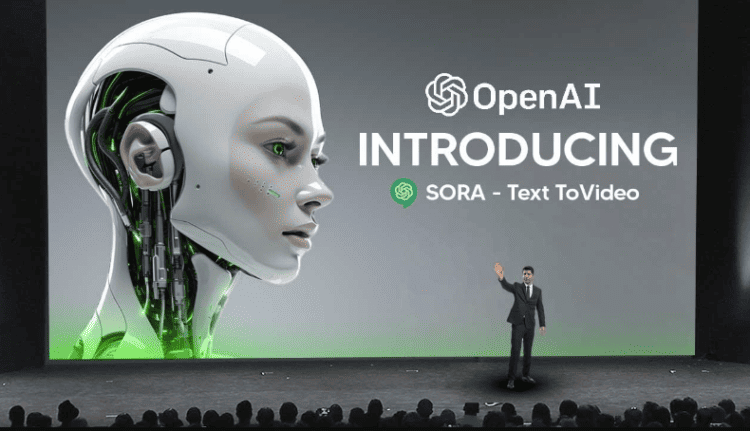

OpenAI’s groundbreaking Sora video generator, capable of transforming text into lifelike videos, has garnered both admiration and apprehension within the AI community. While hailed as a leap forward in AI technology, concerns loom large over the potential ramifications of deepfake proliferation, particularly in the context of the upcoming global elections in 2024.

The Sora AI model represents a significant advancement, enabling the creation of videos up to 60 seconds in length using textual prompts or a combination of text and images. From picturesque scenes of Tokyo streets to surreal scenarios like airborne sharks amidst city skyscrapers, Sora demonstrates a remarkable level of realism that blurs the line between fiction and reality.

According to Hany Farid, a digital forensics expert at the University of California, Berkeley, advancements in generative AI raise the specter of increasingly convincing deepfakes, amplifying concerns over their potential misuse. Combining AI-generated video with AI-powered voice cloning compounds these anxieties, since it could allow fabricated footage of people saying and doing things they never did.

Built upon OpenAI’s existing technologies such as DALL-E and the GPT language models, Sora combines a diffusion model with a transformer architecture to enhance realism. Despite occasional glitches like misplaced limbs or floating objects, Sora’s output is a significant improvement over earlier AI-generated video, as noted by Rachel Tobac, co-founder of SocialProof Security.

Arvind Narayanan, a computer scientist at Princeton University, warns that deepfake detection will become steadily harder. While current glitches may make fabricated videos easier to spot for now, long-term adaptation strategies are deemed imperative as the technology improves.

Recognizing the potential for misuse, OpenAI has adopted a cautious approach, subjecting Sora to rigorous testing by domain experts in misinformation and bias. The company’s proactive measures include “red team” exercises aimed at identifying vulnerabilities and mitigating risks before making Sora publicly available.

However, concerns persist regarding the dissemination of AI-generated deepfakes, particularly in the realm of political propaganda and online harassment. With misinformation posing a significant threat to societal discourse, collaborative efforts between AI companies, social media platforms, and governments are deemed essential to curb the spread of fabricated content.

Looking ahead, the integration of unique identifiers or “watermarks” for AI-generated content emerges as a potential defense mechanism against deepfake proliferation. As OpenAI deliberates on the future release of Sora, prioritizing safety measures remains paramount, especially in the context of the unprecedented global elections slated for 2024.

